Huge Snow Plow

As the Snowplow platform matures and is adopted more and more widely, understanding how Snowplow performs under various event scales and distributions becomes increasingly important.

Our new open-source Avalanche project is our attempt to create a standardized framework for testing Snowplow batch and real-time pipelines under various loads. We hope it will also expand our own and the community’s knowledge of which configurations work best, and help us discover (and then remove!) any limitations we come across.

At launch, Avalanche is wholly focused on load-testing of the Snowplow collector components. Over time we hope to extend this to: load-testing other Snowplow components (and indeed the end-to-end pipeline); automated auditing of test runs; extending Avalanche to test other event platforms.

In the rest of this post we will cover:

1. How to set up the environment

Avalanche comes pre-packaged as an AMI available directly from the Community AMIs section when launching a fresh EC2 instance. Simply search for snowplow-avalanche-0.1.0 to find the required AMI and then follow these setup instructions to get started.

Once the instance has launched and you have SSH’ed onto the box, you will need to set up your environment variables for the simulation:

- SP_COLLECTOR_URL: your Snowplow Collector endpoint

- SP_SIM_TIME: the total simulation time in minutes

- SP_BASELINE_USERS: the baseline number of users pinging the collector

- SP_PEAK_USERS: the peak number of users the load test ramps up to
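
As a minimal sketch, the four variables could be exported in your shell session before launching Gatling. Every value below is a placeholder chosen for illustration rather than a recommendation, and the collector URL (including its format) is hypothetical:

```shell
# Placeholder values for illustration only; substitute your own.
export SP_COLLECTOR_URL="http://collector.example.com"  # hypothetical endpoint
export SP_SIM_TIME=10          # total simulation time, in minutes
export SP_BASELINE_USERS=100   # baseline number of users
export SP_PEAK_USERS=1000      # peak number of users

# Quick sanity check that every variable is set before starting a run.
for name in SP_COLLECTOR_URL SP_SIM_TIME SP_BASELINE_USERS SP_PEAK_USERS; do
  printenv "$name" > /dev/null || echo "$name is not set" >&2
done
```

Exporting the variables (rather than just assigning them) matters here because Gatling runs as a child process of the shell.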

You can then go ahead and launch Gatling using either our launch script:

ubuntu$ ./snowplow/scripts/2_run.sh

Or you can launch it yourself:

ubuntu$ /home/ubuntu/snowplow/gatling/gatling-charts-highcharts-bundle-2.2.1-SNAPSHOT/bin/gatling.sh -sf /home/ubuntu/snowplow/src

After which you can select the simulation you wish to run:

Choose a simulation number:

[0] com.snowplowanalytics.avalanche.ExponentialPeak

[1] com.snowplowanalytics.avalanche.LinearPeak

Or to directly launch the simulation without any interaction:

ubuntu$ /home/ubuntu/snowplow/gatling/gatling-charts-highcharts-bundle-2.2.1-SNAPSHOT/bin/gatling.sh -sf /home/ubuntu/snowplow/src -s com.snowplowanalytics.avalanche.ExponentialPeak

The above can be useful if you wish to run Avalanche across many EC2 instances at the same time and would like to supply the launch command within the User-Data section instead of having to SSH onto each instance.
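
A User-Data script along these lines is one way this might look. It is a sketch only: the environment values are placeholders, the collector URL is hypothetical, and the variables are exported inside the script because User-Data does not inherit anything from an interactive SSH session.

```shell
#!/bin/bash
# Sketch of an EC2 User-Data script; all values below are placeholders.
export SP_COLLECTOR_URL="http://collector.example.com"  # hypothetical endpoint
export SP_SIM_TIME=10
export SP_BASELINE_USERS=100
export SP_PEAK_USERS=1000

# Paths and simulation name as used in the commands above.
GATLING_HOME=/home/ubuntu/snowplow/gatling/gatling-charts-highcharts-bundle-2.2.1-SNAPSHOT
SIMULATION=com.snowplowanalytics.avalanche.ExponentialPeak

# Only launch when Gatling is actually present (i.e. on the Avalanche AMI).
if [ -x "$GATLING_HOME/bin/gatling.sh" ]; then
  "$GATLING_HOME/bin/gatling.sh" -sf /home/ubuntu/snowplow/src -s "$SIMULATION"
else
  echo "gatling.sh not found under $GATLING_HOME" >&2
fi
```

Swapping SIMULATION to com.snowplowanalytics.avalanche.LinearPeak would run the other bundled simulation in the same way.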

For very high throughputs, you will need to contact Amazon Technical Support to have them pre-warm your Load Balancer to be able to handle the throughput being generated by Gatling.

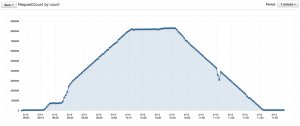

Note: using Gatling, we comfortably managed 825,000 requests per minute from a single c4.8xlarge instance. For much more than this, we recommend running Avalanche from multiple instances.

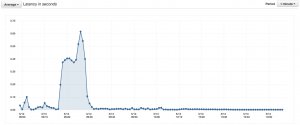
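
For rough sizing, the single-instance figure above can be turned into an instance count. The sketch below assumes each instance can sustain the same 825,000 requests per minute, which will not hold for other instance types; the target rate is a hypothetical example.

```shell
# Single-instance rate quoted above (requests per minute, c4.8xlarge).
PER_INSTANCE_RPM=825000

# Equivalent per-second rate: 825000 / 60 = 13750.
echo "Per-instance rate: $((PER_INSTANCE_RPM / 60)) requests/second"

# Round up the number of instances needed for a target rate (requests/minute).
instances_needed() {
  local target_rpm=$1
  echo $(( (target_rpm + PER_INSTANCE_RPM - 1) / PER_INSTANCE_RPM ))
}

instances_needed 2000000   # hypothetical target; prints 3
```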

2. How to access results

Gatling generates results as a simple webpage. The directory in which these result pages are stored is set by the -rf flag passed when you launch Gatling. When launching via the 2_run.sh script above, this is set to /home/ubuntu/snowplow/results.
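
To locate the newest report on the box, something like the following could work. It assumes Gatling's usual layout of one subdirectory per run with an index.html inside; the latest_report helper is our own illustration, not part of Avalanche.

```shell
# Results directory used by 2_run.sh; override by setting RESULTS_DIR.
RESULTS_DIR=${RESULTS_DIR:-/home/ubuntu/snowplow/results}

# Print the index.html of the most recently modified run directory, if any.
latest_report() {
  local dir=$1 latest
  latest=$(ls -1dt "$dir"/*/ 2>/dev/null | head -n 1)
  [ -n "$latest" ] && echo "${latest%/}/index.html"
}

latest_report "$RESULTS_DIR" || echo "No runs found in $RESULTS_DIR" >&2
```

Because the report is static HTML, copying the run directory to your own machine and opening index.html in a browser is one way to view it.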